Preparing aged-care facilities for assessor-led funding under AN-ACC

Telstra Health’s Clinical Manager is a core operational system used daily by residential aged-care providers to manage clinical documentation, administration, and compliance. It sits at the centre of how facilities record care, assemble evidence, and meet regulatory obligations.

Following the Royal Commission into Aged Care Quality and Safety, government reform fundamentally changed how funding decisions were made. Facility systems no longer determined funding outcomes, but they remained accountable for the quality and completeness of the evidence supporting external assessment. This work updated Clinical Manager to operate effectively within that shift.

Operating environment

Context

Organisation: Telstra Health (Aged & Disability)

Environment: Residential aged-care software platform (Clinical Manager)

Users: Clerical and clinical staff preparing residents for assessment

Role: Senior UX Designer

Timeframe: 6-month delivery window

Constraints

Government-defined funding and assessment model

Classification authority external to providers

Long-established platform with embedded legacy workflows

High audit and compliance exposure

At a glance

System shift: From provider-led funding calculation to assessor-aligned preparation

Capability: Evidence traceability organised by national assessment structure

Scope: Assessment preparation embedded within a live, legacy care platform

Assessment authority shifted outside facilities

PART 1

Policy context and reform drivers

The Royal Commission into Aged Care Quality and Safety identified systemic issues in how residential aged-care funding was assessed and allocated, including inconsistent classification practices and incentives that did not always align with resident need.

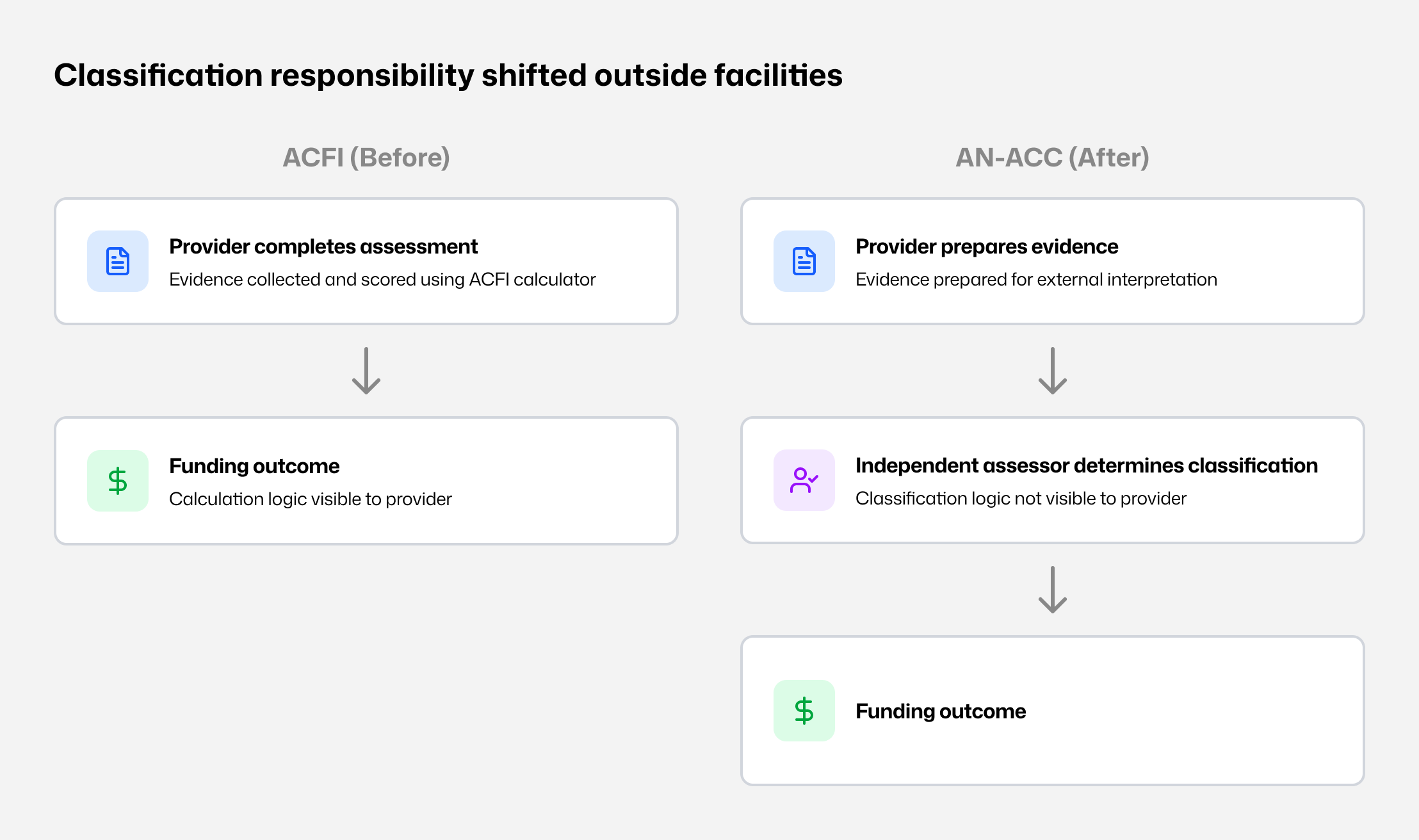

In response, the Australian Government replaced the Aged Care Funding Instrument (ACFI) with the Australian National Aged Care Classification (AN-ACC). The reform aimed to standardise assessment nationally and remove funding determination from provider control.

This was not a product change.

It was a structural shift in responsibility and accountability.

The ACFI operating model

Under ACFI:

Assessments were completed internally.

Evidence was collected, scored, and reviewed in-house.

Funding outcomes could be estimated using an ACFI calculator.

Calculation logic was visible to providers.

This transparency shaped both workflow and behaviour. Because funding outcomes could be anticipated, facilities organised assessment practices around known scoring mechanics. During the Royal Commission, this dynamic was cited as a risk to equity and consistency, including optimisation patterns around residents whose needs aligned to higher funding bands.

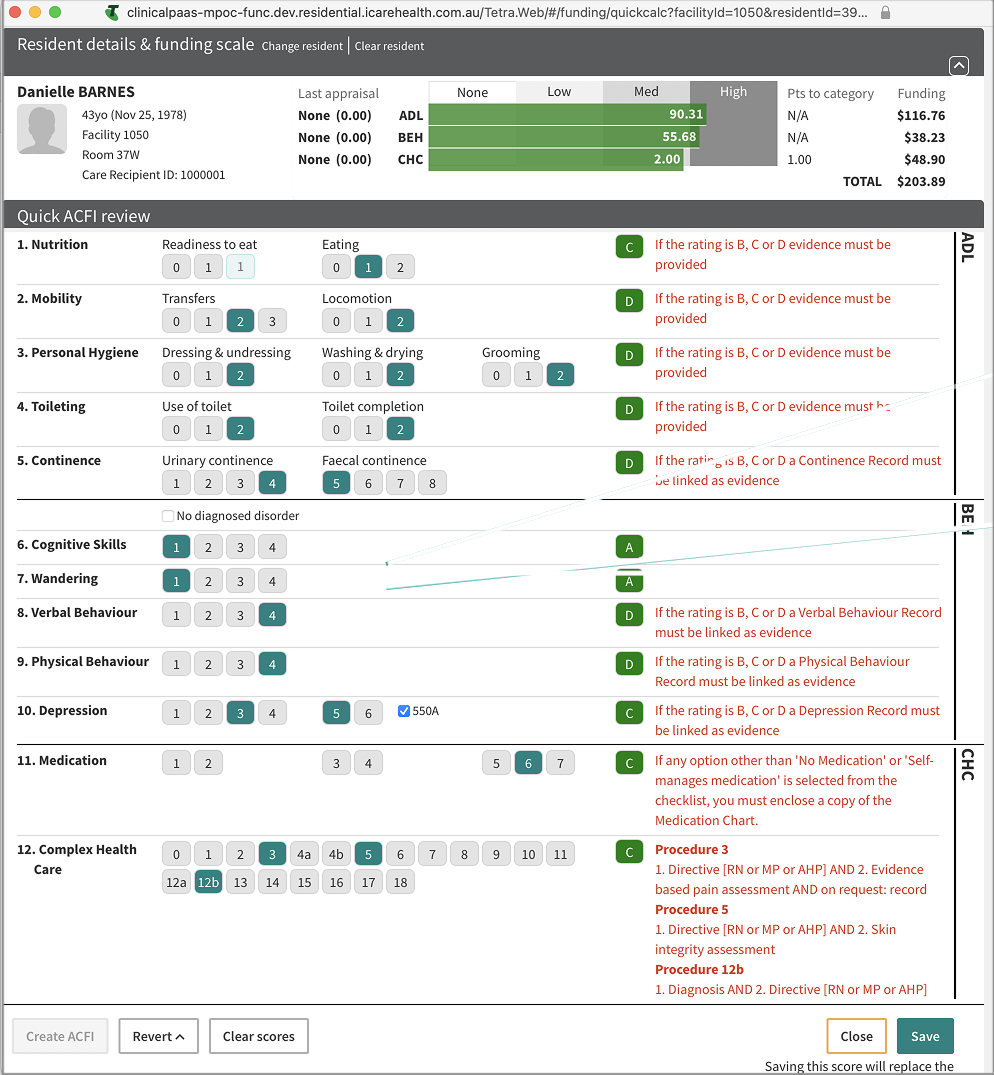

Clinical Manager reflected this model through calculator-led workflows and score-driven interpretation.

What AN-ACC changed

AN-ACC shifted assessment responsibility away from providers.

Under the new model:

Independent assessors apply a national assessment tool.

Classifications are no longer determined internally.

Funding outcomes depend on assessor interpretation of submitted evidence.

Classification logic is not visible to providers.

Internal systems shifted from assessment to preparation.

Early interviews with service managers raised concerns about funding risk, assessor interpretation, and the adequacy of existing clinical documentation.

Visual 1 - Assessment authority shift: Assessment moved from provider-led scoring to external classification under AN-ACC.

Loss of calculation visibility

At the time of rollout, the detailed logic used by assessors to determine classifications under AN-ACC was not available to providers. Providers could no longer see how individual data points contributed to funding outcomes.

Preparation quality now depended entirely on whether assessors could:

locate relevant evidence,

understand its context, and

interpret resident need accurately from the documentation provided.

This significantly increased the importance of structured, complete, and easily retrievable evidence.

Resulting system mismatch

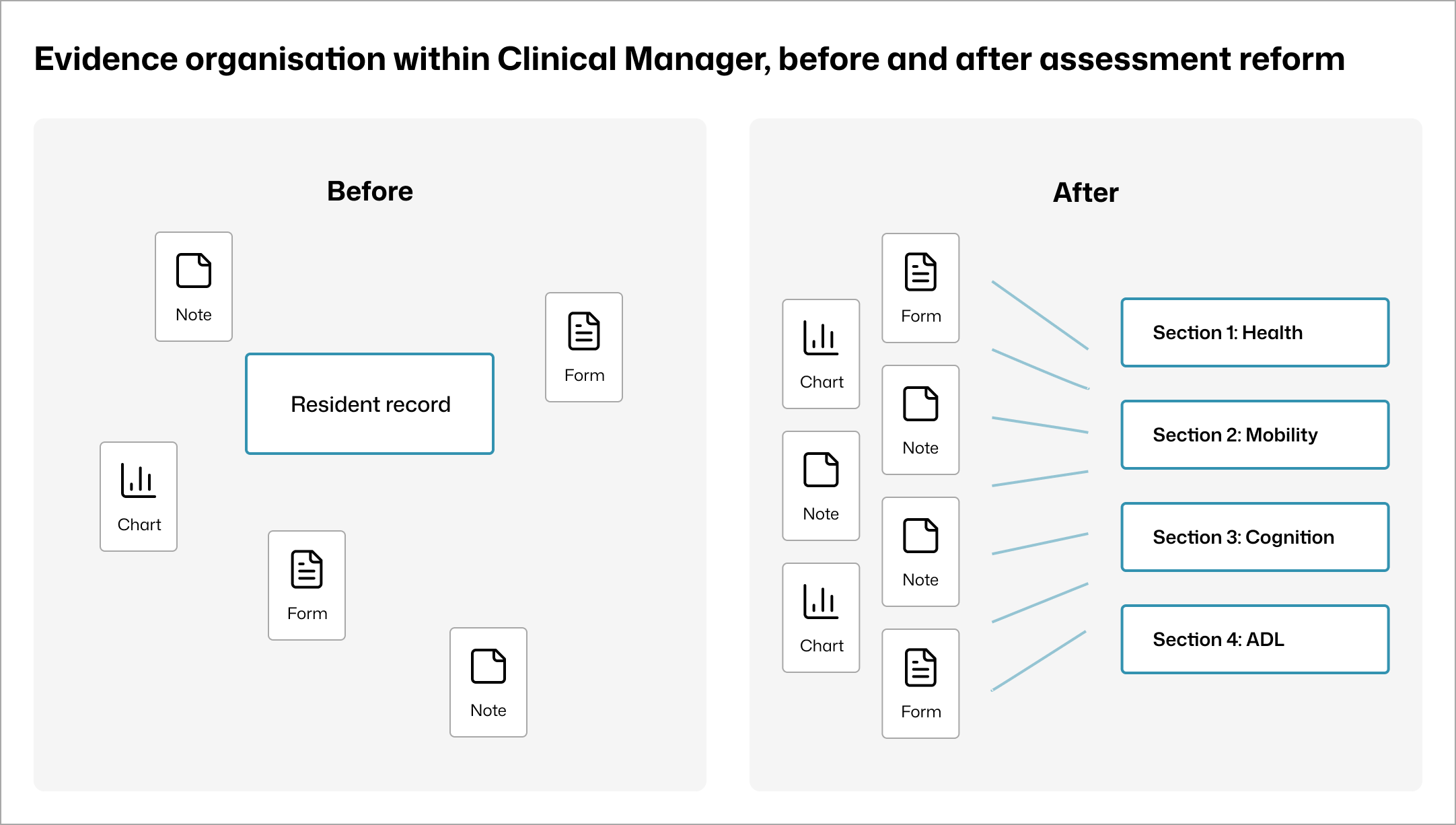

Inside Clinical Manager, the reform exposed a structural misalignment between existing workflows and the new assessment model:

Existing workflows reinforced a scoring mindset that no longer applied.

Clinical evidence existed across notes, charts, and forms, but was difficult to assemble by assessment section.

Calculator patterns implied predictability and control that facilities no longer had.

The primary risk was not usability.

The risk was preparing staff for a process that no longer existed.

Visual 2 - Evidence without assessment structure: Clinical documentation was not organised by AN-ACC assessment sections.

Insight

Staff were not seeking ways to influence outcomes. They were seeking clarity on how to prepare evidence that would withstand external review.

Design requirement

Clinical Manager needed a preparation system shaped around the assessor lens, with evidence explicit, traceable, and reviewable without implying control over classification.

Research grounding

Interviews with clerical and clinical staff across multiple residential aged-care facilities during the transition period surfaced consistent concerns:

uncertainty about how assessors would interpret documentation,

difficulty locating and presenting relevant evidence under time pressure,

fear of omission during assessment preparation,

strong preference for predictable, stepwise preparation.

Shaping a preparation system aligned to assessor interpretation

PART 2

System intent

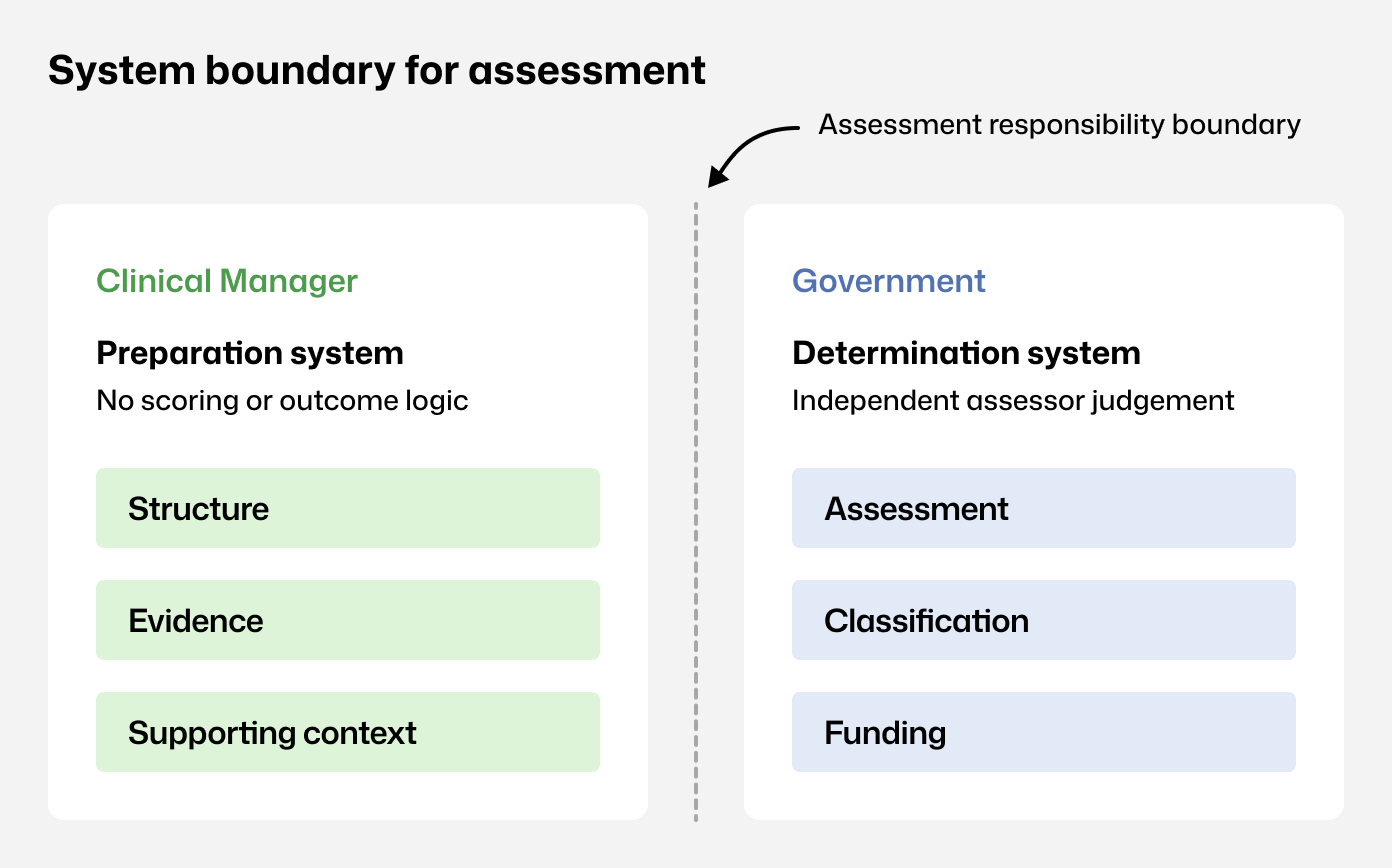

Before making interface decisions, the system boundary had to be explicit.

The system would:

support preparation for assessment,

reflect the structure of the AN-ACC assessment tool,

surface evidence in assessment context.

The system would not:

determine classifications,

predict funding outcomes,

reproduce ACFI optimisation patterns.

This boundary framed all decisions that followed.

Visual 3 - Assessment responsibility boundary: Clinical Manager supports preparation; assessment, classification, and funding sit with government assessors.

Structural decisions

1. Do not repurpose ACFI calculator patterns

ACFI calculators encouraged score-first behaviour. Under AN-ACC, this became misleading and increased compliance risk.

Decision

Avoid optimisation patterns that imply control over classification.

Visual 4 - Legacy ACFI solution: Calculator-led workflows reinforced internal scoring rather than assessor-aligned preparation.

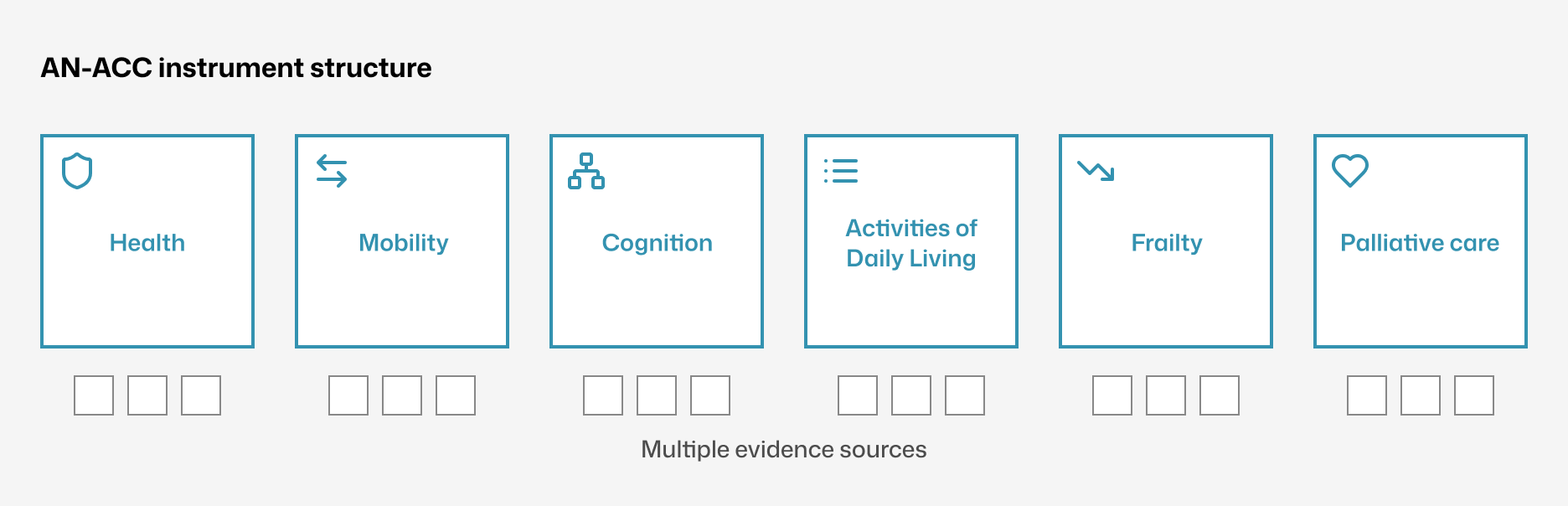

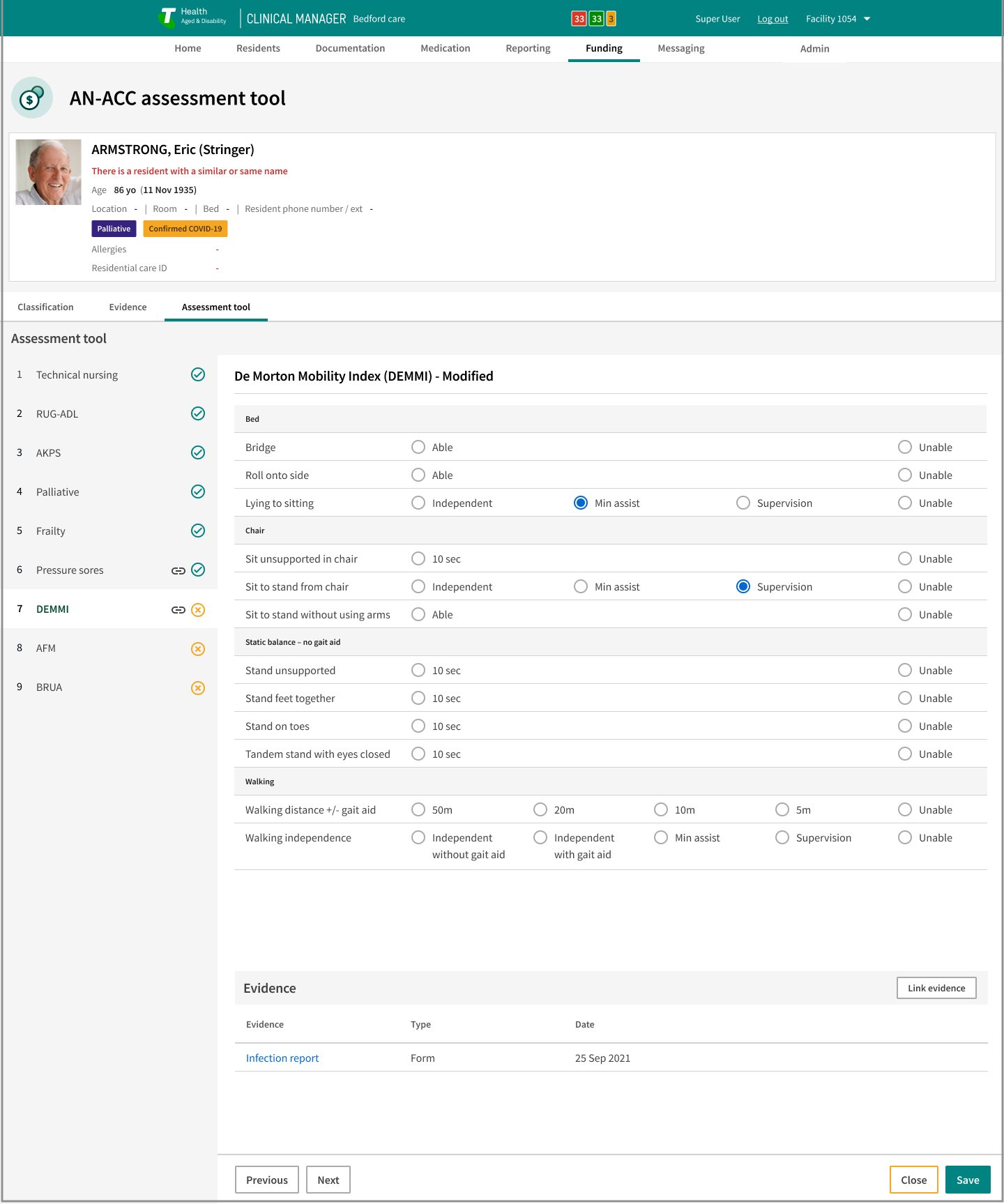

2. Mirror AN-ACC instruments as the primary information architecture

AN-ACC is composed of distinct instruments with specific evidence expectations. Using this structure as navigation created a shared definition of completeness and reduced the risk of missed sections.

Visual 5 - AN-ACC instrument-led navigation: Navigation mirrors the national AN-ACC instrument structure, grouping evidence under each assessment section.

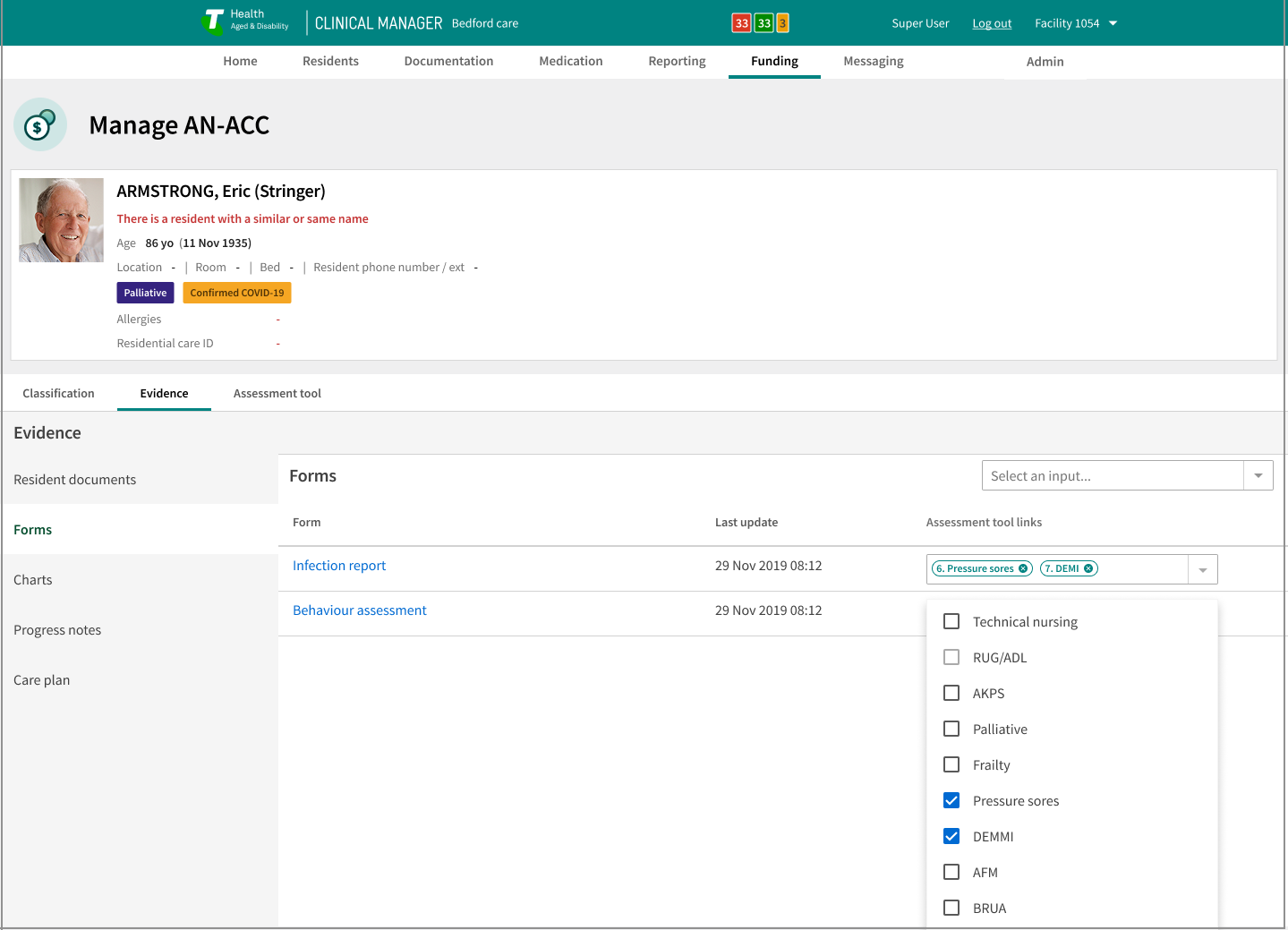

3. Make evidence linkable at the assessment section level

Facilities needed to show assessors where supporting documentation lived without duplicating records. Evidence remained part of the resident record while becoming linkable to assessment sections.

Visual 6 - Section-linked evidence within the resident record: Evidence remains in the resident record and is explicitly linked to assessment sections, making coverage visible without duplicating documentation.
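As an illustration only, the linking approach can be sketched as a small data model. This is not Clinical Manager's actual schema: the class names, field names, section identifiers, and resident identifier below are all hypothetical. The sketch assumes evidence is stored once in the resident record and that assessment sections hold references to it rather than copies.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class EvidenceItem:
    """One document in the resident record (hypothetical shape)."""
    evidence_id: str
    kind: str      # e.g. "progress note", "chart", "form"
    summary: str


@dataclass
class ResidentRecord:
    """Holds evidence once; sections point to it by id (assumed design)."""
    resident_id: str
    evidence: dict[str, EvidenceItem] = field(default_factory=dict)
    # section id -> ids of linked evidence (references, not copies)
    section_links: dict[str, set[str]] = field(default_factory=dict)

    def add_evidence(self, item: EvidenceItem) -> None:
        self.evidence[item.evidence_id] = item

    def link(self, section: str, evidence_id: str) -> None:
        # Only evidence that already exists in the record can be linked.
        if evidence_id not in self.evidence:
            raise KeyError(f"unknown evidence: {evidence_id}")
        self.section_links.setdefault(section, set()).add(evidence_id)

    def coverage(self, sections: list[str]) -> dict[str, int]:
        """Count linked evidence per section; 0 marks a gap to review."""
        return {s: len(self.section_links.get(s, ())) for s in sections}


# Usage: one note supports two placeholder sections without being duplicated.
record = ResidentRecord("resident-001")
record.add_evidence(EvidenceItem("note-17", "progress note", "Mobility observation"))
record.link("section-a", "note-17")
record.link("section-b", "note-17")

coverage = record.coverage(["section-a", "section-b", "section-c"])
print(coverage)  # {'section-a': 1, 'section-b': 1, 'section-c': 0}
```

Storing links as identifiers keeps the resident record as the single source of each document, and the per-section count reports coverage only. It deliberately says nothing about classification or funding.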

4. Keep contextual note capture inside the assessment flow

Preparation surfaced judgements and observations that needed recording without losing place. In-flow capture reduced task switching and preserved assessment context.

User experience prioritisation

Predictability governed the UX:

stable navigation by assessment section,

consistent layouts across instruments,

visible evidence status in context,

linear progression over flexible branching.

This reduced decision load during preparation while staff continued routine care.

What was rejected

Facility-side scoring or funding prediction tools

Free-form evidence repositories detached from assessment structure

Parallel funding packs outside the resident record

Global evidence visibility without role-based controls

Each increased risk by weakening traceability, implying outcome control, or exposing sensitive resident information beyond clinical need.

These patterns conflicted with the AN-ACC separation of preparation and determination.

Capability created and supporting evidence

PART 3

Capability created

Assessor-aligned preparation structure inside Clinical Manager

Clear separation between preparation and external determination

Evidence

Structural impact

One preparation flow replaced four to five disconnected screens

Assessment sections and linked evidence presented together in one view

Increased staff confidence during readiness activities

Measured outcomes

Evidence lookup time reduced by ~70% (from 6–10 minutes to under 2 minutes)

Reduced follow-up clarification after assessment preparation

Visual 7 - Assessment preparation in practice: Implemented AN-ACC preparation interface in active use.

Outcome

Facilities regained control over preparation quality without implying control over classification outcomes.

What was not proven

Classification accuracy

Funding outcomes

Long-term documentation behaviour change

Reflection

AN-ACC reframed funding preparation as an interpretation and evidence problem.

By aligning system structure to the assessor lens and making evidence explicit inside the resident record, the platform implemented a preparation model that supports reform-driven change without reworking the underlying care workflows.